As is the case with many other developers, the reports over the last few years of the huge energy requirements of the web have prompted me to take a look at my own websites and see what I can do to minimize their impact. This piece will cover some of my experiences in doing this, as well as my current thoughts on optimizing websites for carbon emissions, and some practical examples of things you can do to improve your own pages.

But first, a confession: When I first heard about the environmental impact of websites, I didn’t quite believe it. After all, digital is supposed to be better for the planet, isn’t it?

I’ve been involved in various green and environmental groups for decades. In all of that time, I can’t consciously remember anyone ever discussing the possible environmental impacts of the web. The focus was always on reducing consumption and moving away from burning fossil fuels. The only time the Internet was mentioned was as a tool for communicating with one another without the need to chop down more trees, or for working without a commute.

So, when people first started talking about the Internet having similar carbon emissions to the airline industry, I was a bit skeptical.

Emissions

It can be hard to visualize the huge network of hardware that allows you to send a request for a page to a server and then receive a response back. Most of us don’t live in data centers, and the cables that carry the signals from one computer to another are often buried beneath our feet. When you can’t see a process in action, the whole thing can feel a little bit like magic — something that isn’t helped by the insistence of certain companies on adding words like “cloud” and “serverless” to their product names.

As a result of this, my view of the Internet for a long time was a little ephemeral, a sort of mirage. When I started writing this article, however, I performed a little thought experiment: How many pieces of hardware does a signal travel through from the computer I’m writing at to get outside of the house?

The answer was quite shocking: 3 cat cables, a switch, 2 powerline adapters, a router and modem, an RJ11 cable, and several meters of electrical wiring. Suddenly, that mirage was beginning to look rather more solid.

Of course, the web (and, by extension, the websites we make) does have a carbon footprint. All of the servers, routers, switches, modems, repeaters, telephone cabinets, optical-to-electrical converters, and satellite uplinks of the Internet must be built from metals extracted from the Earth, and from plastics refined from crude oil. To then provide data to the estimated 20 billion connected devices worldwide, they need to consume electricity, which also releases carbon when it is generated (even renewable electricity is not carbon neutral, although it is a lot better than fossil fuels).

Accurately measuring just what those emissions are is probably impossible — each device is different and the energy that powers them can vary over the course of a day — but we can get a rough idea by looking at typical figures for power consumption, user bases, and so on. One tool that uses this data to estimate the carbon emissions of a single page is the Website Carbon Calculator. According to it, the average page tested “produces 1.76 grams of CO2 per page view”.
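To get a feel for what a per-view figure means over time, it can be scaled up to a year of traffic. The sketch below is only a rough illustration: the 1.76 gram figure is the average quoted above, while the 10,000 monthly page views is a made-up traffic level, and the function name is hypothetical.

```python
def annual_emissions_kg(grams_per_view: float, views_per_month: int) -> float:
    """Estimate yearly CO2 emissions in kilograms from a per-view figure."""
    grams_per_year = grams_per_view * views_per_month * 12
    return grams_per_year / 1000  # convert grams to kilograms


# 1.76 g is the average quoted above; 10,000 monthly views is an assumed traffic level.
average_page = annual_emissions_kg(1.76, 10_000)
print(f"{average_page:.1f} kg of CO2 per year")
```

Under those assumptions, an average page would account for a little over 200 kg of CO2 a year.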

If you’ve been used to thinking about the work you do as essentially harmless to the environment, this realization can be quite disheartening. The good news is that, as developers, we can do an awful lot about it.

Recommended reading: How Improving Website Performance Can Help Save The Planet

Performance And Emissions

If we remember that viewing websites uses electricity and that producing electricity releases carbon, then we’ll know that the emissions of a page must heavily depend on the amount of work that both the server and client have to perform in order to display the page. Also, the amount of data that is required for the page, and the complexity of the route it must travel through, will determine the amount of carbon released by the network itself.

For example, downloading and rendering example.com will likely consume far less electricity than Apple’s home page, and it will also be much quicker. In effect, what we are saying is that high emissions and slow page loads are just two symptoms of the same underlying causes.

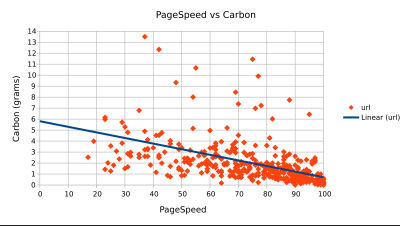

It’s all very well to talk about this relationship in theory, of course, but having some real-world data to back it up would be nice. To do just that, I decided to conduct a little study. I wrote a simple command-line interface program to take a list of the 500 most popular websites on the Internet, according to MOZ, and check their home pages against both Google’s PageSpeed Insights and the Website Carbon Calculator.
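The program itself is not reproduced here, but the PageSpeed Insights half of such a check could look roughly like the sketch below. It assumes version 5 of the PageSpeed Insights API, whose JSON response reports the performance score as a fraction between 0 and 1. The function names are hypothetical, and the network request is left out so that the example stays self-contained.

```python
from urllib.parse import urlencode

# Public endpoint of the PageSpeed Insights API (version 5).
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url: str, strategy: str = "mobile") -> str:
    """Build the request URL for checking one page."""
    query = urlencode({"url": page_url, "strategy": strategy})
    return f"{PSI_ENDPOINT}?{query}"


def performance_score(psi_response: dict) -> int:
    """Convert the 0-1 performance score in a decoded response to the 0-100 scale."""
    score = psi_response["lighthouseResult"]["categories"]["performance"]["score"]
    return round(score * 100)


print(psi_request_url("https://example.com/"))
```

Fetching that URL for each home page in the list and passing the decoded JSON to `performance_score` would give one half of each data point; the emissions estimate would come from a separate request to the Website Carbon Calculator.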

Some of the checks timed out (often because the page in question simply took too long to load), but in total, I managed to collect results for over 400 pages on 14 July 2021. You can download the summary of results to examine yourself, but to provide a visual indication, I have plotted them in the chart below:

As you can see, while the variation between individual websites is very high, there is a strong trend towards lower emissions from faster pages. The mean emissions for websites with a PageSpeed score of 100 are about 1 gram of carbon, rising to a projected figure of almost 6 grams for websites with a score of 0. I find it slightly reassuring that, despite there being many websites with very low speeds and high emissions, most of the results are clustered in the bottom right of the chart.
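As a rough reading of that trend, and assuming it is a straight line between the two figures above (with "almost 6 grams" rounded to 6), the expected emissions E for a PageSpeed score s would be approximately:

```latex
E(s) \approx 6 - \frac{6 - 1}{100}\, s = 6 - 0.05\, s
\qquad \text{(grams of carbon per view, } 0 \le s \le 100\text{)}
```

On that reading, a page scoring 50 would be expected to emit around 3.5 grams per view. This is only an approximation of the average; as the spread of results shows, individual websites can sit well above or below the line.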

Taking Action

Once we understand that much of a page’s emissions originate from poor performance, we can start taking steps to reduce them. Many of the things that contribute to a website’s emissions are beyond our control as developers. We can’t, for example, choose the devices that our users access our pages from or decide on the network infrastructure that their requests travel through, but we can take steps to improve our websites’ performance.

Performance optimization is a broad topic, and many of you reading this likely have more experience than I do, but I would like to briefly mention a few things that I have observed recently when optimizing various pages’ loading speed and carbon emissions.

Rendering Is Much Slower on Mobile

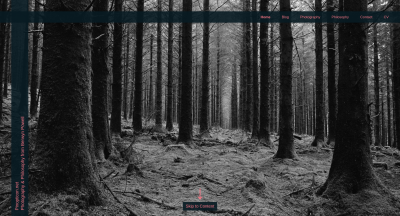

I recently reworked the design of my personal blog in order to make it a little more user-friendly. One of my hobbies is photography, and the website had previously featured a full-height header image.

While the design did a good job of showcasing my photographs, it was a complete pain to scroll past, especially when moving through pages of blog posts. I didn’t want to lose the feeling of having a photo in the header, however, and eventually settled on using it as a background for the page title.

The full-height header had been using srcset in order to make loading as fast as possible, but the images were still very big on high-resolution screens, and my Largest Contentful Paint (LCP) time on mobile for the old design was almost 3 seconds. A big advantage of the new design was that it allowed me to make the images much smaller, which reduced the LCP time to about 1.5 seconds.

On laptops and desktops, people wouldn’t have noticed a difference, because both versions were well under a second, but on much less powerful mobile devices, it was quite dramatic. What was the effect of this change on carbon emissions? 0.31 grams per view before, 0.05 grams after. Decoding and rendering images is very resource-intensive, and the cost rises steeply as the images get bigger.
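One way to see why larger images are so much more expensive is to look at the size of the decoded bitmap rather than the file on disk. The sketch below assumes 4 bytes per pixel (RGBA), which is a common in-memory representation but an assumption here; the function name and the example dimensions are hypothetical.

```python
def decoded_size_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Approximate memory needed to hold a fully decoded image."""
    return width * height * bytes_per_pixel


# Each step doubles both dimensions, so the pixel count quadruples.
for width, height in [(960, 540), (1920, 1080), (3840, 2160)]:
    mib = decoded_size_bytes(width, height) / (1024 * 1024)
    print(f"{width}x{height}: {mib:.1f} MiB decoded")
```

However well the file is compressed for transfer, the device still has to produce and paint every one of those pixels, which is why serving smaller dimensions helps so much on low-powered hardware.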

The size of images isn’t the only thing that can have an impact on the time to decode; the format is important as well. Google’s Lighthouse often recommends serving images in next-generation formats to reduce the amount of data that needs to be downloaded, but new formats are often slower to decode, especially on mobile. Sending less data over the wire is better for the environment, but it is possible that consuming more energy to decode could offset that benefit. As with most things, testing is key here.

From my own testing in trying to add support for AVIF encoding to the Zola static site generator, I found that AVIF, which promises much smaller file sizes than JPG at the same quality, took orders of magnitude longer to encode. This is consistent with bunny.net’s observation that WebP outperforms AVIF by as much as 100 times. While it is encoding, the server will be consuming electricity, and I do wonder whether, for websites with low visitor counts, switching to the new format might actually end up increasing emissions and reducing performance.

Images, of course, are not the only component of modern web pages that take a long time to process. Even small JavaScript files, depending on what they are doing, can take a long time to execute, and the same potential pitfalls as images apply.

Recommended reading: The Humble img Element And Core Web Vitals

Round-Trips Add Up

Another thing that can have a surprising impact on performance and emissions is where your data is coming from. Conventional wisdom has long said that serving assets such as frameworks from a central content delivery network (CDN) will improve performance because getting data from local nodes is generally faster for users than from a central server. jQuery, for example, has the option to be loaded from a CDN, and its maintainers say that this can improve performance, but real-world testing by Harry Roberts has shown that self-hosting assets is generally faster.
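Part of the reason is that every additional host a page pulls assets from needs its own DNS lookup, TCP handshake, and TLS negotiation before any data arrives. A minimal sketch for spotting those extra hosts in a list of asset URLs follows; the function name, the page URL, and the asset list are all hypothetical.

```python
from urllib.parse import urljoin, urlsplit


def extra_hosts(page_url: str, asset_urls: list) -> list:
    """Return the hosts, other than the page's own, that its assets load from."""
    page_host = urlsplit(page_url).hostname
    hosts = set()
    for asset in asset_urls:
        # Resolve relative URLs against the page before reading the host.
        host = urlsplit(urljoin(page_url, asset)).hostname
        if host and host != page_host:
            hosts.add(host)
    return sorted(hosts)


assets = [
    "/css/site.css",
    "https://code.jquery.com/jquery-3.6.0.min.js",
    "https://cdn.example.net/framework.css",
]
print(extra_hosts("https://example.com/blog/", assets))
```

Each host in the result represents at least one new connection that self-hosting the asset would avoid.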

This has also been my experience. I recently helped a gaming website improve its performance. The website was using a fairly large CSS framework and loading all of its third-party assets via a CDN. We switched to self-hosting all assets and removed unused components from the framework.

None of the optimizations resulted in any visual changes to the website, but together they increased the Lighthouse score from 72 to 98 and reduced carbon emissions from 0.26 grams per view to 0.15.
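To put those emissions figures in relative terms, a small helper (hypothetical, shown only to make the arithmetic explicit) can express the change as a percentage:

```python
def percent_reduction(before: float, after: float) -> float:
    """Return how much smaller `after` is than `before`, as a percentage."""
    return (before - after) / before * 100


# Grams of carbon per view, before and after the optimizations described above.
print(f"{percent_reduction(0.26, 0.15):.0f}% lower emissions per view")
```

That works out to a reduction of roughly two fifths, without any visible change to the website.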

Send Only What You Need

This leads nicely onto the subject of sending users only the data they actually need. I’ve worked on (and visited) many, many websites that are dominated by stock images of people in suits smiling at one another. There seems to be a mentality amongst certain organizations that what they do is really boring and that adding photos will somehow convince the general public otherwise.

I can sort of understand the thinking behind this because there are numerous pieces on how the amount of time people spend reading is declining. Text, we are repeatedly told, is going out of fashion; all people are interested in now are videos and interactive experiences.

From that point of view, stock photos could be seen as a useful tool to liven up pages, but eye-tracking studies show that people ignore images that aren’t relevant. When people aren’t looking at your images, the images might as well be empty space. And when every byte costs money, contributes to climate change, and slows down loading times, it would be better for everyone if they actually were.

Again, what can be said for images can be said for everything else that isn’t the page’s core content. If something isn’t contributing to a user’s experience in a meaningful way, it shouldn’t be there. I’m not for a moment advocating that we all start serving unstyled pages — some people, such as those with dyslexia, do find large blocks of text difficult to read, and other users almost certainly would find such pages boring and go elsewhere — but we should look critically at every part of our websites to consider whether they are earning their keep.

Accessibility and the Environment

Another area where performance and emissions converge is in the field of accessibility. There is a common misconception that making websites accessible involves adding ARIA attributes and JavaScript to a page, but often what you leave out is more important than what you put in, making an accessible website relatively lightweight and performant.

Using Standard Elements

MDN Web Docs has some very good tutorials on accessibility. In “HTML: A Good Basis for Accessibility”, they cover how the best foundation of an accessible website lies in using the correct HTML elements for the content. One of the most interesting sections of the article is where they try to recreate the functionality of a button element using a div and custom JavaScript.

This is obviously a minimal example, but I thought it would be interesting to compare the size of this button version to one using standard HTML elements. The fake button example in this case weighs around 1,403 bytes uncompressed, whereas an actual button with less JavaScript and no styling weighs 746 bytes. The div button will also be semantically meaningless and, therefore, much harder for people with screen readers to use and for bots to parse.

Recommended reading: Accessible SVGs: Perfect Patterns For Screen Reader Users

When scaled up, these sorts of things make a difference. Parsing minimal markup and JavaScript is easier for a browser, just as it is easier for developers.

On a larger scale, I was recently refactoring the HTML of a website that I work on — doing things like removing redundant title attributes and replacing divs with more semantic equivalents. The original page had a structure like the following (content removed for privacy and brevity):

    <div class="container">
      <section>
        <div class="row">
          <div class="col-md-3">
            <aside>
              <!-- Sidebar content here -->
            </aside>
          </div>
          <div class="col-md-9">
            <!-- Main content here -->
            <h4>Content piece heading</h4>
            <p>
              Some items;<br>
              Item 1 <br>
              Item 2 <br>
              Item 3 <br>
              <br>
            </p>
            <!-- More main content here -->
          </div>
        </div>
      </section>
    </div>

With the full content, this weighed 34,168 bytes.

After refactoring, the structure resembled this:

    <div class="container">
      <div class="row">
        <main class="col-md-9 col-md-push-3">
          <!-- Main content here -->
          <h3>Content piece heading</h3>
          <p>Some items;</p>
          <ul>
            <li>Item 1</li>
            <li>Item 2</li>
            <li>Item 3</li>
          </ul>
          <!-- More main content here -->
        </main>
        <aside class="col-md-3 col-md-pull-9">
          <!-- Sidebar content here -->
        </aside>
      </div>
    </div>

It weighed 32,805 bytes.
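For anyone wanting to make the same comparison on their own pages, the sketch below shows one way to measure the uncompressed weight of markup and to express the saving. The function names are hypothetical; the two byte counts are the ones quoted above.

```python
def byte_weight(markup: str) -> int:
    """Uncompressed size of markup in bytes when encoded as UTF-8."""
    return len(markup.encode("utf-8"))


def bytes_saved(before: int, after: int) -> tuple:
    """Return the absolute saving in bytes and the saving as a percentage."""
    saved = before - after
    return saved, saved / before * 100


saved, percent = bytes_saved(34_168, 32_805)
print(f"{saved} bytes saved ({percent:.1f}%)")
```

Measuring bytes rather than characters matters because any non-ASCII character takes more than one byte in UTF-8.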

The changes are currently ongoing, but already the markup is far more accessible according to WebAIM, Lighthouse, and manual testing. The file size has also gone down, and, when averaging the time from five profiles in Chrome, the time to parse the HTML has dropped by about 2 milliseconds.

These are obviously small changes and probably won’t make any perceptible difference to users. However, since every byte costs users and the environment, it is nice to know that making a website accessible can also make it a little bit lighter.

Videos

Project Gutenberg’s HTML version of The Complete Works of William Shakespeare is approximately 7.4 MB uncompressed. According to Android Authority in “How Much Data Does YouTube Actually Use?”, a 360p YouTube video weighs about 5 to 7.5 MB per minute of footage, and 1080p about 50 to 68 MB. So, for the same amount of bandwidth as all of Shakespeare’s plays, you will get only about 7 seconds of high-definition video. Video is also very intensive to encode and decode, and this is probably a major contributing factor to estimates of Netflix’s carbon emissions being as high as 3.2 kg per hour.
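The comparison can be checked with a few lines of arithmetic. The sketch below uses only the figures quoted above; the function name is hypothetical.

```python
def seconds_of_video(budget_mb: float, mb_per_minute: float) -> float:
    """How many seconds of video fit into a given data budget."""
    return budget_mb / mb_per_minute * 60


SHAKESPEARE_MB = 7.4  # uncompressed HTML size quoted above

# Data rates in MB per minute, as (low, high) estimates for each resolution.
for label, low, high in [("360p", 5, 7.5), ("1080p", 50, 68)]:
    shortest = seconds_of_video(SHAKESPEARE_MB, high)
    longest = seconds_of_video(SHAKESPEARE_MB, low)
    print(f"{label}: {shortest:.1f} to {longest:.1f} seconds")
```

At 1080p, the budget lasts somewhere between 6.5 and 9 seconds depending on which end of the range is used, and even at 360p it covers no more than about a minute and a half.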

Most videos rely on both visual and auditory components to communicate their message, and large file sizes require a certain level of connectivity. This obviously places limits on who can benefit from such content. Making video accessible is possible but far from simple, and many websites simply don’t bother.

If video were only ever treated as a form of progressive enhancement, this would perhaps not be a problem, but I have lost count of the number of times I have been searching for something on the web, and the only way to find the information I wanted was by watching a video. On YouTube, the average number of monthly users grew from 20 million in 2006 to 2 billion in 2020. Vimeo also has a continually growing user base.

Despite the huge number of visitors to video-sharing websites, many of the most popular ones do not seem to be fully compliant with accessibility legislation. In contrast to this, numerous types of assistive technologies are designed to make plain text accessible to as wide a variety of people as possible. Text is also easy to convert from one format to another, so it can be used in a number of different contexts.

As we can see from the example of Shakespeare, plain text is also incredibly space-efficient, and it has a far lower carbon footprint than any other form of human-friendly information transmitted on the web.

Video can be great, and many people learn best by watching a process in action, but it also leaves some people out and has an environmental cost. To keep our websites as lightweight and inclusive as possible, we should treat text as the primary form of communication wherever possible, and offer things such as audio and video as an extra.

Recommended Reading: Optimizing Video For Size And Quality

In Conclusion

Hopefully, this brief look at my experience in trying to make websites better for the environment has given you some ideas for things to try on your own websites. It can be quite disheartening to run a page through the Website Carbon Calculator and be told that it could be emitting hundreds of kilograms of CO2 a year. Fortunately, the sheer size of the web can amplify positive changes as well as negative ones, and even small improvements soon add up on websites with thousands of visitors a week.

Even though we are seeing things like a 25-year-old website increasing 39 times in size after a redesign, we are also seeing websites being made to use as little data as possible, and clever people are figuring out how to deliver WordPress in 7 KB. So, in order for us to reduce the carbon emissions of our websites, we need to make them faster — and that benefits everybody.